Driver Assistance Systems for the City: Seeing and Recognizing Dangers in the Same Way as a Human

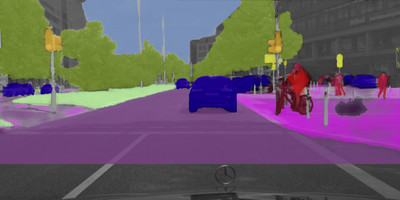

A traffic situation with pedestrians and cyclists in which every object is clearly detected and identified, thanks to scene labelling.

- Where is the danger? Scene labelling allows complex traffic situations to be reliably understood

- Has he stopped? Or is he walking? Identifying the intentions of crossing pedestrians

- All clear? City-centre lane changing made easy

- Where next? Identifying intentions to turn or change lane

- Closing presentation of UR:BAN research project

STUTTGART -- Oct. 8, 2015: Cross-traffic, cyclists, crossing pedestrians perhaps engrossed in their smartphones, mothers with buggies, children playing: city traffic places demands on drivers in many different situations while at the same time posing accident risks. There is plenty of scope here for assistance systems that support the driver and make urban driving safer and less stressful. On the way to that goal, Daimler researchers have achieved a breakthrough within the UR:BAN research initiative. Using so-called "scene labelling", their camera-based system automatically classifies completely unknown situations and thus detects all objects that matter for driver assistance, from cyclists to pedestrians to wheelchair users.

Researchers in the "Environment Sensing" department showed their system thousands of photos from various German cities, in which they had manually and precisely labelled 25 different object classes, such as vehicles, cyclists, pedestrians, streets, pavements, buildings, posts and trees. From these examples, the system learned automatically to classify scenes it had never seen before, detecting the relevant objects even when they were largely hidden or far away. This is made possible by powerful computers running artificial neural networks organised in a manner similar to the human brain, so-called Deep Neural Networks.

Consequently, the system functions in a manner comparable to human sight, which is also based on a highly complex neural system: it links the information from the individual sensory cells of the retina until a human is able to identify and differentiate an almost unlimited number of objects. Scene labelling thus transforms the camera from a mere measuring device into an interpretive system, as multifunctional as the interplay between eye and brain.

Prof. Ralf Guido Herrtwich, Head of Driver Assistance and Chassis Systems, Group Research and Advance Development at Daimler AG: "The tremendous increase in computing power in recent years has brought closer the day when vehicles will be able to see their surroundings in the same way as humans and also correctly understand complex situations in city traffic." To advance this system quickly, Daimler is continuing its research together with partners, working towards the vision of accident-free driving.
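Scene labelling assigns an object class to every pixel of the camera image. The press release does not describe Daimler's network, so the following is only a minimal sketch of the final step common to such systems: given per-pixel class scores produced by some neural network (not modelled here), each pixel takes the class with the highest score. For brevity it uses only the eight of the 25 classes named above; the function name `label_scene` is hypothetical.

```python
from typing import List, Sequence

# Eight of the 25 object classes named in the text; the full class list is not published here.
CLASSES = ["vehicle", "cyclist", "pedestrian", "street",
           "pavement", "building", "post", "tree"]


def label_scene(scores: Sequence[Sequence[Sequence[float]]]) -> List[List[str]]:
    """Assign each pixel the class with the highest score (hypothetical helper).

    scores[y][x][c] is an assumed network score for class c at pixel (y, x).
    """
    labels: List[List[str]] = []
    for row in scores:
        row_labels = []
        for pixel_scores in row:
            if len(pixel_scores) != len(CLASSES):
                raise ValueError("one score per class expected")
            # argmax over classes; ties resolve to the first class listed
            best = max(range(len(pixel_scores)), key=lambda c: pixel_scores[c])
            row_labels.append(CLASSES[best])
        labels.append(row_labels)
    return labels
```

For a 1×2 image whose first pixel scores highest for class index 1 and whose second scores highest for index 3, `label_scene` returns `[["cyclist", "street"]]`.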

Future driver assistance functions were demonstrated in test vehicles

At the closing event of the collaborative research project UR:BAN, short for "Urban Space: User-friendly Assistance Systems and Network Management", the Daimler researchers presented convincing results from a total of five different test vehicles. In addition to a real-time demonstration of scene labelling, another test vehicle showed imaging radar systems and the fascinating new possibilities they open up in urban environments. It was shown that radar sensors are now capable of resolving and visualising in detail not only dynamic objects but also the entire static environment. Thanks to the particular properties of radar waves, the system also works in fog and bad weather. Moreover, the so-called micro-Doppler effect allows the signatures of moving pedestrians and cyclists to be classified unambiguously. It was also demonstrated how environment data from radar and camera sensors are merged by sensor fusion into an environment model. The model takes account not only of the locations and speeds of the various road users but also of attributes such as the type and size of the objects. It also allows for incomplete sensor data and missing information, as is typically the case in real road traffic.
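The text does not say how Daimler's sensor fusion works internally. As a minimal sketch of the general idea only, the snippet below fuses one position coordinate from a radar and a camera by inverse-variance weighting and treats a missing measurement (`None`) as incomplete sensor data. The `Measurement` type, the `fuse` function and the example numbers are hypothetical; a real environment model would also track speeds, object type and size over time, for example with a Kalman filter.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Measurement:
    """One sensor's estimate of an object's position along one axis (hypothetical type)."""
    value: float     # e.g. longitudinal distance in metres
    variance: float  # measurement uncertainty sigma^2, must be > 0


def fuse(measurements: Sequence[Optional[Measurement]]) -> Optional[Tuple[float, float]]:
    """Inverse-variance weighted fusion; None entries model missing sensor data.

    Returns (fused value, fused variance), or None if no sensor delivered data.
    """
    available = [m for m in measurements if m is not None]
    if not available:
        return None  # nothing observed this cycle; the model must cope without it
    if any(m.variance <= 0 for m in available):
        raise ValueError("variances must be positive")
    weights = [1.0 / m.variance for m in available]  # more precise sensors weigh more
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, available)) / total
    return value, 1.0 / total
```

With radar at 20.0 m (variance 0.25 m²) and camera at 21.0 m (variance 1.0 m²), `fuse` returns a position of about 20.2 m with variance 0.2 m²: closer to the more precise radar and less uncertain than either sensor alone. If the camera value is missing, the radar estimate passes through unchanged.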

At the closing event of the collaborative research project UR:BAN, short for "Urban Space: User-friendly Assistance Systems and Network Management", the Daimler researchers presented convincing results from a total of five different test vehicles. In addition to a real-time demonstration of scene labelling, another test vehicle showed imaging radar systems and the new, fascinating possibilities they offer in urban environments. It was shown that radar sensors are now capable of comprehensively resolving and visualising not just any dynamic object, but also every static environment. The particular properties of radar waves mean that the system can also function in fog and bad weather. Moreover, the so-called micro-doppler allows the signatures of moving pedestrians and cyclists to be unambiguously classified. In addition, it was demonstrated at the trade fair how environment data from radar and camera sensors are merged by sensor fusion to form an environment model. The model takes account not only of the locations and speeds of the various road users, but also of attributes such as the type and size of the objects. The environment model also makes allowance for incomplete sensor data as well as missing information, as is typically the case in actual road traffic.

The third test vehicle included a system for the detection, classification and intention recognition of pedestrians and cyclists. Much like a human driver, this system analyses head posture, body position and position relative to the kerb to predict whether a pedestrian intends to stay on the pavement or cross the road. In dangerous situations, this allows an accident-preventing system response to be triggered up to one second earlier than with currently available systems.
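To put "up to one second earlier" into perspective, a short worked calculation helps. Assuming a vehicle travelling at 50 km/h (the usual urban speed limit in Germany; the text itself does not state a speed), one second corresponds to this distance travelled:

$$
d = v\,\Delta t = \frac{50\ \text{km/h}}{3.6} \times 1\ \text{s} \approx 13.9\ \text{m/s} \times 1\ \text{s} \approx 13.9\ \text{m}
$$

Under that assumption, the system can respond while the vehicle is still roughly 14 m further from the pedestrian, before any braking dynamics are taken into account.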

A further highlight was a demonstration of how radar- and camera-based systems can make lane changes in city traffic safer and more comfortable. Following a command from the driver, this system provides assisted lane changing in the speed range between 30 and 60 km/h. The system senses the surroundings as well as the traffic in the lanes. The situational analysis predicts how the scenario will develop and then releases the computed trajectory. This is followed by assisted longitudinal and lateral control for the lane change. The driver can tell intuitively from the instrument cluster whether or not the system can execute the requested lane change. Once the lane change has been completed successfully, longitudinal control with the lane-keeping function resumes. The driver can override the system at any time by intervening with the steering, accelerator or brakes.
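The sequence described above (driver request, availability check within the 30 to 60 km/h window, assisted lane change, return to lane keeping, driver override at any time) can be summarised as a small state machine. This is a hypothetical sketch: only the speed window comes from the text. The names `Mode`, `can_change_lane` and `step`, and the boolean `target_lane_clear` that stands in for the situational analysis, are illustrative assumptions, and re-engaging the system after an override is left out.

```python
from enum import Enum, auto


class Mode(Enum):
    LANE_KEEPING = auto()   # longitudinal control with lane keeping
    CHANGING_LANE = auto()  # assisted longitudinal and lateral control
    DRIVER = auto()         # driver has overridden the system


# Speed window from the text; all other logic here is a hypothetical illustration.
MIN_SPEED_KMH = 30.0
MAX_SPEED_KMH = 60.0


def can_change_lane(speed_kmh: float, target_lane_clear: bool) -> bool:
    """True if a requested lane change may be executed (what the cluster would show).

    `target_lane_clear` is a stand-in for the situational analysis result.
    """
    in_speed_window = MIN_SPEED_KMH <= speed_kmh <= MAX_SPEED_KMH
    return in_speed_window and target_lane_clear


def step(mode: Mode, *, requested: bool, speed_kmh: float,
         target_lane_clear: bool, change_done: bool, driver_input: bool) -> Mode:
    """Advance the hypothetical lane-change state machine by one control cycle."""
    if driver_input:
        # Steering, accelerator or brake input always overrides the system.
        return Mode.DRIVER
    if (mode is Mode.LANE_KEEPING and requested
            and can_change_lane(speed_kmh, target_lane_clear)):
        return Mode.CHANGING_LANE
    if mode is Mode.CHANGING_LANE and change_done:
        # Resume longitudinal control with lane keeping after the change.
        return Mode.LANE_KEEPING
    return mode  # otherwise stay in the current mode
```

Keeping the availability check in its own function mirrors the text's separation between telling the driver whether a lane change is possible and actually carrying it out.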

The fifth test vehicle showed the potential for predicting driver behaviour ahead of planned lane changes or changes of direction. For an imminent lane change, for example, glances over the shoulder are linked with driving parameters that have already been sensed. A likely change of direction can be predicted from the interplay of steering movement, speed reduction and map information. In the demonstration, the direction indicator was then activated automatically to inform other road users as early as possible.
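How the fifth test vehicle weighs its cues is not described. As an illustration only, the rule below predicts a turn when all three cues named in the text agree: steering movement, speed reduction and a junction ahead according to map data. The thresholds, the sign convention and the names `DrivingState` and `predict_turn` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds, chosen only for illustration.
STEER_THRESHOLD_DEG = 10.0
DECEL_THRESHOLD_MPS2 = 1.0
JUNCTION_WINDOW_M = 40.0


@dataclass
class DrivingState:
    steering_angle_deg: float             # assumed convention: positive = left
    decel_mps2: float                     # positive = slowing down
    dist_to_junction_m: Optional[float]   # from map data; None if no junction ahead


def predict_turn(s: DrivingState) -> Optional[str]:
    """Return 'left' or 'right' if all three cues indicate a turn, else None."""
    near_junction = (s.dist_to_junction_m is not None
                     and s.dist_to_junction_m <= JUNCTION_WINDOW_M)
    slowing = s.decel_mps2 >= DECEL_THRESHOLD_MPS2
    steering = abs(s.steering_angle_deg) >= STEER_THRESHOLD_DEG
    if near_junction and slowing and steering:
        return "left" if s.steering_angle_deg > 0 else "right"
    return None
```

A non-`None` result marks the point at which, in a system like the one demonstrated, the direction indicator would be switched on.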